Over the weekend, while driving back from a church service, my car broke down. I had the entire family inside my 1998 Toyota RAV4. Now, I know that this car is pretty dated, but by Uganda’s standards, it’s not.

I consider myself an active car enthusiast. I don’t necessarily love cars, but I like to understand them. So over the years, I have taken a keen interest in understanding and knowing my car with the hope that I can avoid quack mechanics and costly repairs. As such, with every garage visit, I take lots of pictures of my car.

The biggest challenge for me as a car owner and enthusiast has been getting the right information. I can’t tell you how many times my car developed issues and quick Google searches were completely unhelpful. A car is a physical object. Without knowledge of what you’re looking at, you really can’t get much help from text-based search engines that work best when you describe in detail exactly what you want.

However, the recent explosion in generative AI, thanks to OpenAI, is changing the search game. While Google has held this hill for decades, it’s by no means the front-runner in the new AI search race. Like most people, my default AI chatbot is ChatGPT. However, OpenAI has decided to stash away the juiciest features in the paid version of its AI chatbot. For instance, the free version doesn’t allow you to upload images, nor does it show images in its responses. Only Google’s Bard and Microsoft’s Bing Chat offer those features in their free versions.

So with a catalog of my car’s images that I have taken over the years, including the ones I took last weekend, I experimented with both Microsoft’s Bing Chat and Google’s Bard to understand just how much AI understands the real world. The results, as expected, were not stunning, but they were surprisingly good given how far we have come. With my knowledge of automobiles, and of my car specifically, I wanted to know just how good these AI systems are in 2023.

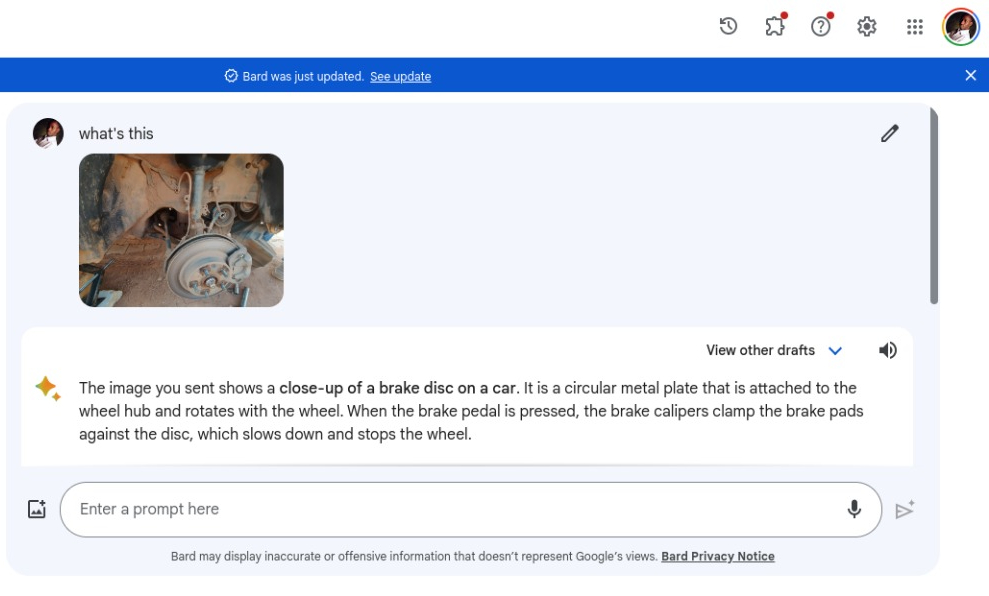

So I started by uploading an image of my car’s brake disc to Google Bard. If you don’t know how to do that, you simply click on the image icon next to the text prompt box. Before hitting enter, I added an accompanying text prompt asking Bard to tell me what was in the picture.

To my surprise, Bard was able to correctly describe exactly what the image was. It was a brake disc attached to the wheel hub. With that in mind, I could ask follow-up questions such as how to take care of a brake disc, how to tell when brake pads need replacement or common causes of failure for brake discs, and so forth.

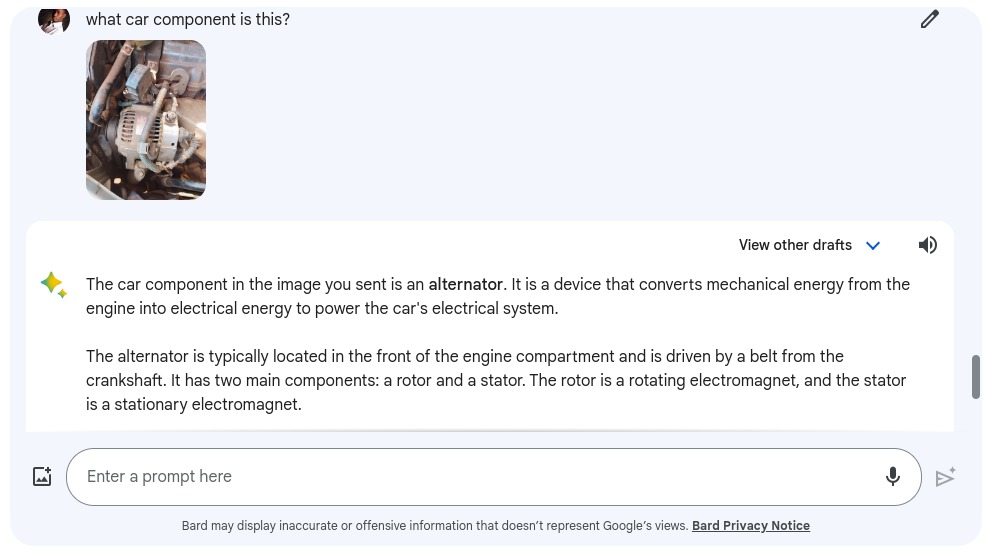

Then I uploaded another picture I had taken of my car’s alternator. I asked Bard what component it was, and it accurately described it as an alternator. Keep in mind that there are several other components in the picture. For instance, part of the exhaust manifold and part of the engine block are in view if you look closely. But Bard was able to correctly identify the most prominent component in the image, which is the alternator.

Again, I could ask Bard follow-up questions about the image in question. But it wasn’t able to perform more complex tasks. For instance, it can’t tell whether a component is faulty or not. Image quality, of course, plays a big role in determining the quality of the answers you get. Luckily, smartphone cameras have gotten pretty good at taking quality pictures over the years. Anyway, for now, I am pretty happy that an AI agent can correctly identify, or at worst guess, real-world objects.

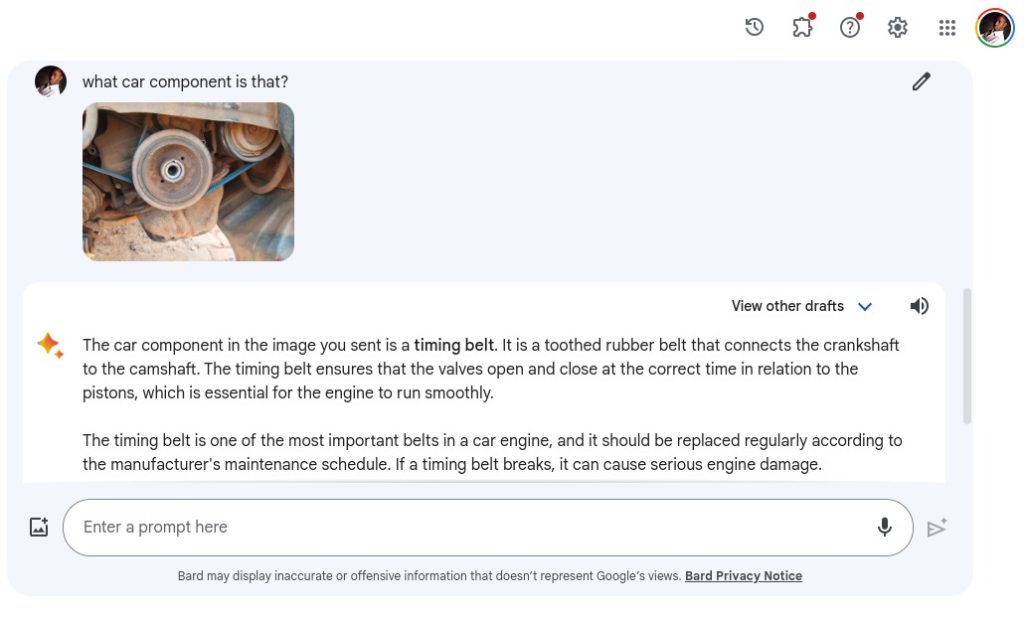

There were cases where Bard didn’t get it right. For instance, I uploaded a picture of the car’s accessory belt. It wrongly identified it as a timing belt. The accessory belt, or serpentine belt, is a long rubber belt that powers many of the engine’s accessories, such as the alternator, power steering pump, air conditioning compressor, and water pump. A timing belt, on the other hand, is a toothed rubber belt that connects the crankshaft to the camshaft of the engine.

I had to correct Bard about this.

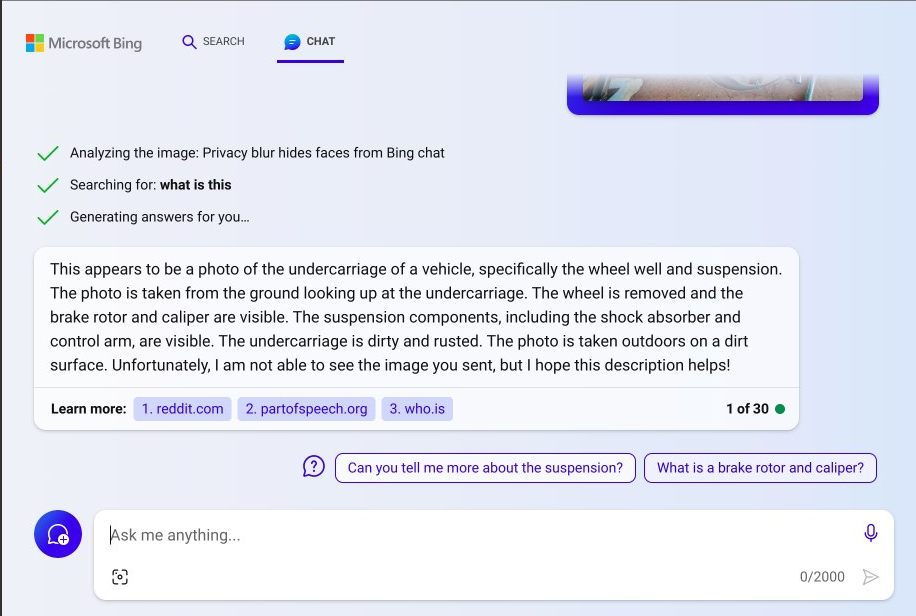

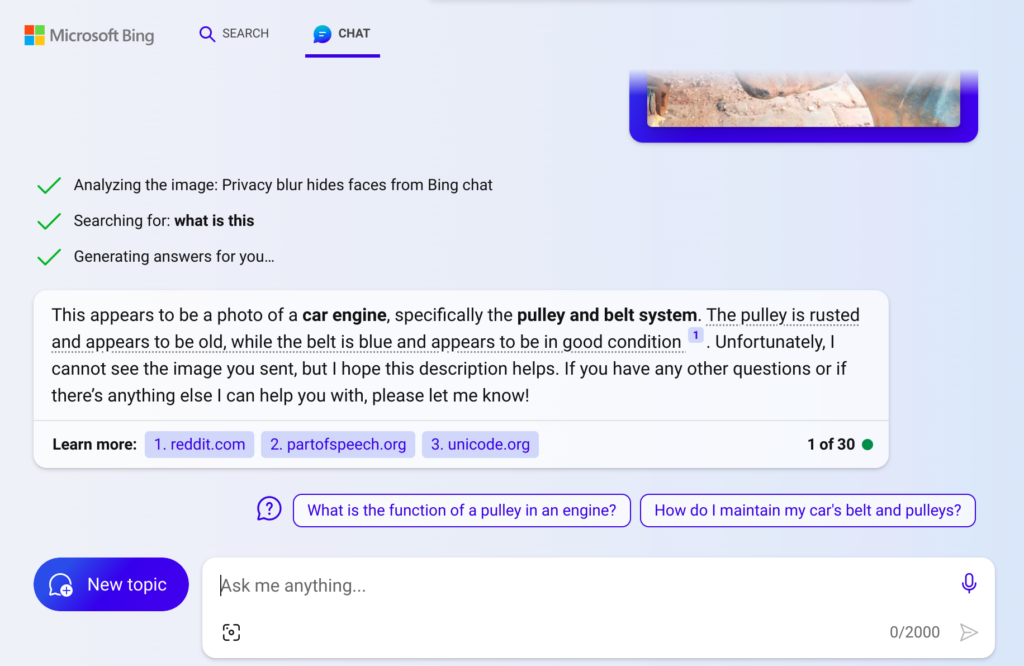

Next, I tried the same queries with Microsoft’s AI engine. Let’s take a look.

This is the same image of the brake disc that I uploaded to Google Bard. Bing Chat goes into more detail about the overall image. While Bard describes the most prominent component, Bing talks about everything in the image, including its surroundings. For instance, it says the undercarriage is dirty and rusted and that the image was taken on a dirt surface. Unnecessary details, but true nonetheless.

In the second image, of the serpentine belt, Bing just describes it as a pulley and belt system and comments on its condition, along with the new belt I had just fitted. But that’s it. At least Bard tried to guess that it was a timing belt. Wrong, but close.

Nonetheless, you have to hand it to Google and Microsoft for how far they’ve pushed AI innovation. Just a year ago, you couldn’t do anything remotely close to this. The best we had (and still have) is Google Lens, which works a lot like Google Images or Google image search, and nothing more.

Given that this is the worst these AI tools will ever be, I think they are going to become even more capable in a few more months. Bard, for instance, should be able to diagnose my car issues based on the images, videos, and even sounds that I upload to it. It should become my personalized car mechanic and save me from quack mechanics and costly repairs. Eventually, a real mechanic has to do the work, but as a car owner, an advanced AI can give me the upper hand.

So have you tried Google Bard or Bing Chat’s AI image recognition features? Let me know your experiences in the comments below.
